11 things to remember when preparing A/B tests

A/B testing can tell you a lot about the effectiveness of your ads, design, and copy, as well as your customers’ or users’ preferences. However, to run successful A/B tests, you need to keep 11 things in mind: six best practices and five common mistakes. Read on to see what they are.


A/B testing: Definition in the context of product development

There are many ways to find out whether your digital product is attractive to potential customers. One of them is based on showing them two different versions of the same piece of content - a method known as A/B testing or split testing.

A/B tests are all about discovering how specific changes affect the effectiveness or attractiveness of a website, design, or UI solution. In this form of testing, you create two versions of the same material and show them to potential customers. The first version is usually referred to as the control version (that’s the version you usually start with and want to compare with something else), whereas the second one is the variant (or challenger) version.

Suppose you want to test your landing page this way. You create two versions of your LP (let’s call them landingpage.com/1 and landingpage.com/2) and display them to potential customers at a 50:50 ratio. Half of the visitors see the first version, and half see the second version.

The goal is to determine which version resonates with your customers better. This way, you can opt for the most desirable design or copy and maximize its effectiveness (e.g. the number of conversions).

What can you test using A/B testing?

A/B testing can be used for sales or anything marketing-related. Typically, marketing or IT teams run A/B tests to evaluate:

- Websites (landing pages, product tabs, online forms, home pages, PWAs, etc.)

- Emails (newsletters, promotional emails, reminders, etc.)

- On-site elements (buttons, CTAs, banners, pop-ups, etc.)

- Ads (including their graphic design, copy, and CTA)

- Content (blog posts, product descriptions, etc. – here, A/B testing revolves around titles, length, structure, and CTAs)

To get reliable, actionable results from any of these tests, you need to get several things right.

How A/B testing is done in a business environment

A/B testing starts with having two versions of a given solution or marketing material. The rest depends on what you want to test. We’ll try to explain this idea using examples from the world of marketing, but the same principles apply to digital product development as well:

- If you want to test your brand new newsletter, you can send it to 50% of your recipients and send the old version to the other 50%.

- If you want to test two versions of an ad, you can upload both of them (to Google Ads, Facebook Ads, etc.) and run two simultaneous campaigns.

- Finally, if you want to test two versions of a landing page, you can split it into two subpages (e.g. 1.landingpage.com and 2.landingpage.com or landingpage.com/1 and landingpage.com/2) and distribute them via email, social media posts, or ads.
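The newsletter example above can be sketched in a few lines of Python. This is a minimal illustration, not the way any particular email tool does it: the function name `split_recipients`, the fixed seed, and the example addresses are all assumptions made for the sketch.

```python
import random


def split_recipients(recipients, seed=42):
    """Shuffle a copy of the list and cut it in half: (control, variant)."""
    shuffled = list(recipients)            # leave the caller's list untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


# Hypothetical addresses, for illustration only.
recipients = [f"user{i}@example.com" for i in range(10)]
control, variant = split_recipients(recipients)
print(len(control), len(variant))
```

Shuffling before cutting matters: if the list is sorted by sign-up date or by domain, taking the first half as the control group would bias the comparison.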

Measuring the effectiveness of A/B testing

There is no one-size-fits-all form of measuring the effectiveness of the different versions. In most cases, you need analytics software/features to measure the performance of both the control and variant versions.

For instance, MailChimp, an email marketing tool, lets you run A/B tests and then measure how each version performed using its built-in analytics features. Major advertising platforms, including Facebook and Google, offer similar features. To some extent, measuring effectiveness happens automatically, provided you have the necessary tool or plugin in place.

How to split traffic for A/B testing

To run a conclusive A/B test, you need two (or more) equal audiences (50:50 ratio) that are large enough to give relevant feedback. Ideally, you should have at least 1,000 unique users in each group. Splitting traffic also depends on what you want to test.
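The 1,000-user figure is a rule of thumb. If you know your current conversion rate and the improvement you hope to detect, you can estimate the group size more precisely. The sketch below uses the standard normal-approximation formula for comparing two proportions. The function name, the default 5% significance level, and the 80% power are assumptions for illustration, not values from this article.

```python
from math import ceil
from statistics import NormalDist


def users_per_group(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate users needed in EACH group to detect the given change."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical rates: 10% today, hoping the variant reaches 15%.
print(users_per_group(0.10, 0.15))
# A smaller expected improvement needs a much larger audience.
print(users_per_group(0.10, 0.11))
```

The takeaway is that the required audience grows quickly as the difference you want to detect shrinks, so a fixed number such as 1,000 may be too few for subtle changes.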

If you use an advertising platform or a marketing automation tool with A/B testing functionality (and you should), they will split the traffic automatically and evenly between test groups.
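If you ever need to split traffic yourself, one common approach is to hash a stable user identifier so that the same visitor always lands in the same group. The sketch below is an assumption about how such a split could look, not a description of how any specific platform works. The `assign_variant` function and the experiment name are hypothetical.

```python
import hashlib


def assign_variant(user_id, experiment="landing-page-test"):
    """Deterministically map a user to 'control' or 'variant' at a 50:50 ratio."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "control" if bucket < 50 else "variant"


# The same (hypothetical) visitor gets the same version on every visit.
print(assign_variant("visitor-123"))
print(assign_variant("visitor-123"))
```

Including the experiment name in the hash means a visitor who saw the control version in one test is not automatically placed in the control group of the next one.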

6 best practices of A/B testing that we use on a daily basis

For best results when preparing an A/B test, we recommend following the same best practices that our product teams use:

- Test more rather than less – sometimes even seemingly minor changes (e.g. a CTA button’s color) can have an impact on conversions! However, make sure that the change you want to test is noticeable to users – testing two very similar fonts won’t give you relevant results.

- Test one thing at a time – the experimental variant should have only one change compared to the control version. Otherwise, you can’t tell which change made the difference.

- Measure everything – for your tests to be useful, you need to know how many people saw both versions of your material and how many interacted with it.

- Exclude your employees and coworkers from counting – clicks coming from within the company can disrupt the final result.

- Use Google Tag Manager – you can create event calls directly in this tool, not in the website’s code. This way, you get more flexibility and save time.

- Consider creating a controlled testing environment – you can ask customers to participate in an online survey where they will be shown two versions of an ad or landing page. This way, you can get more thorough feedback.
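The "measure everything" and "exclude your employees" practices can be combined in a small calculation. The sketch below assumes a simplified event log, where each event is a dictionary with hypothetical `user_id`, `version`, and `type` fields. Your analytics tool will have its own export format.

```python
from collections import Counter

# Hypothetical IDs of employees and coworkers to leave out of the count.
INTERNAL_USERS = {"employee-1", "employee-2"}


def conversion_rates(events, internal_users=INTERNAL_USERS):
    """Return conversions divided by views for each version, ignoring internal users."""
    views, conversions = Counter(), Counter()
    for event in events:
        if event["user_id"] in internal_users:
            continue  # clicks from within the company would distort the result
        if event["type"] == "view":
            views[event["version"]] += 1
        elif event["type"] == "conversion":
            conversions[event["version"]] += 1
    return {version: conversions[version] / views[version] for version in views}


events = [
    {"user_id": "u1", "version": "control", "type": "view"},
    {"user_id": "u1", "version": "control", "type": "conversion"},
    {"user_id": "u2", "version": "control", "type": "view"},
    {"user_id": "u3", "version": "variant", "type": "view"},
    {"user_id": "u3", "version": "variant", "type": "conversion"},
    {"user_id": "employee-1", "version": "variant", "type": "view"},
]
print(conversion_rates(events))  # {'control': 0.5, 'variant': 1.0}
```

Without the exclusion, the employee's view would halve the variant's rate in this tiny example, which shows how a handful of internal clicks can skew a small test.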

5 common mistakes you should avoid when preparing A/B tests

There are a number of common mistakes that can spoil the results of A/B tests. These mistakes are easy to avoid - as long as you know what they are. In our experience, you should look out for:

- Testing the wrong things: You should be selective. If you have a landing page that performs well, running A/B tests on it is a waste of time. Concentrate on elements and materials that don’t perform as well as you’d like.

- Testing many things at once: You can’t compare shirts and pants. If you change many elements at once, you will never be able to tell which of them made the difference, and that knowledge is extremely helpful when planning and creating future marketing materials. If you want to test many things at once, opt for multivariate testing.

- Wrong timing: Testing version A in February and version B in November won’t give you comparable results, because seasonality and traffic patterns differ. Both versions should be tested at the same time, ideally when you get the highest traffic.

- Not achieving test significance: The so-called p-value estimates how likely you would be to see a difference at least as large as the one you measured if the two versions actually performed the same. The lower it is, the more confident you can be that the result is significant. For instance, if each version has only 100 impressions and five conversions, that’s not a significant result, and you should NOT base your marketing decisions on such limited input. Use a statistical significance calculator to work out the p-value for your A/B tests.

- Not having enough starting data: If you start a new landing page and immediately run A/B tests on it, you don’t have enough data to create a baseline for comparison with the variant version. Take your time and gather enough information before running A/B tests.
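To make the significance point above concrete, here is a minimal sketch of one common way to compute a p-value for an A/B test: a two-sided, two-proportion z-test. It is an illustration under stated assumptions, not the method used by any particular calculator. The function name and the conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate if both versions were equal
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_error == 0:
        return 1.0  # no conversions (or all conversions) in both groups
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical small test: 100 impressions per version, 5 vs. 8 conversions.
small = two_proportion_p_value(5, 100, 8, 100)
# The same rates with 100 times more traffic.
large = two_proportion_p_value(500, 10_000, 800, 10_000)
print(f"small sample: p = {small:.3f}")
print(f"large sample: p = {large:.6f}")
```

With the small sample, the p-value stays well above the conventional 0.05 threshold even though one version converted 60% more often. With the larger sample, the same rates become clearly significant. The normal approximation used here is itself unreliable for very small counts, which is one more reason to collect enough data first.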

What are the risks of preparing A/B tests the wrong way?

The risk is always the same – you can get wrong or insignificant results. And making any sales or marketing decisions based on limited or biased feedback won’t get you far. Always double-check your results and ensure the test was done correctly before introducing any major changes to your digital product.

A/B testing: Examples from the world of product development

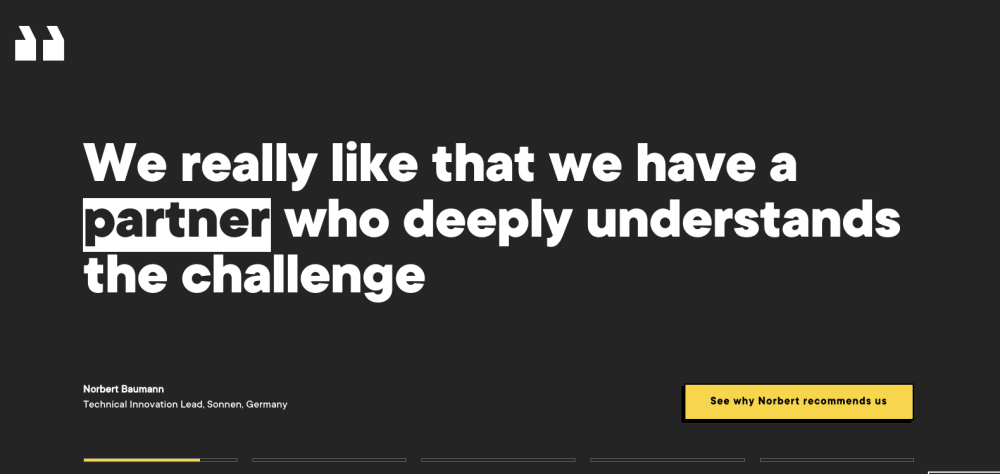

Our service page

We continuously run A/B tests to make sure that our service page offers the best possible experience. One of the tests checked whether our Hero section performs better with or without a picture of our client.

This was our control version:

And this was our variation:

Our tests have shown that the control version (the one with the picture) had 44% more clicks on the CTA button!

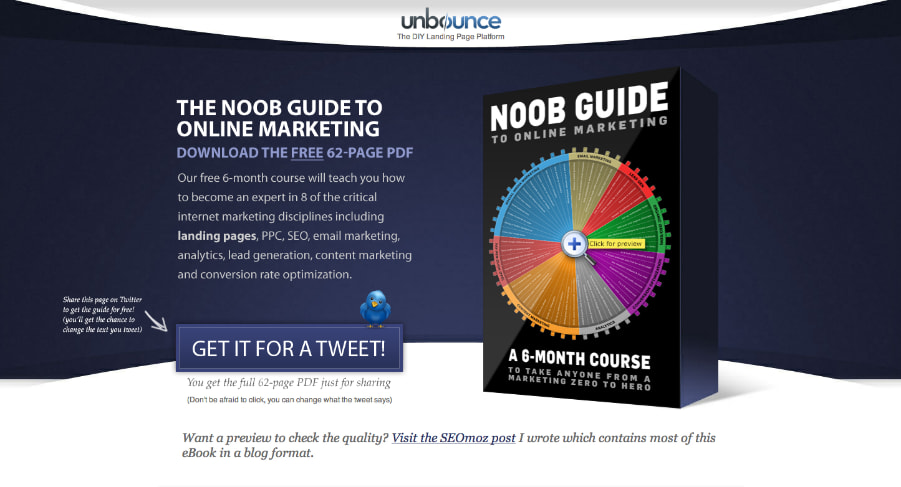

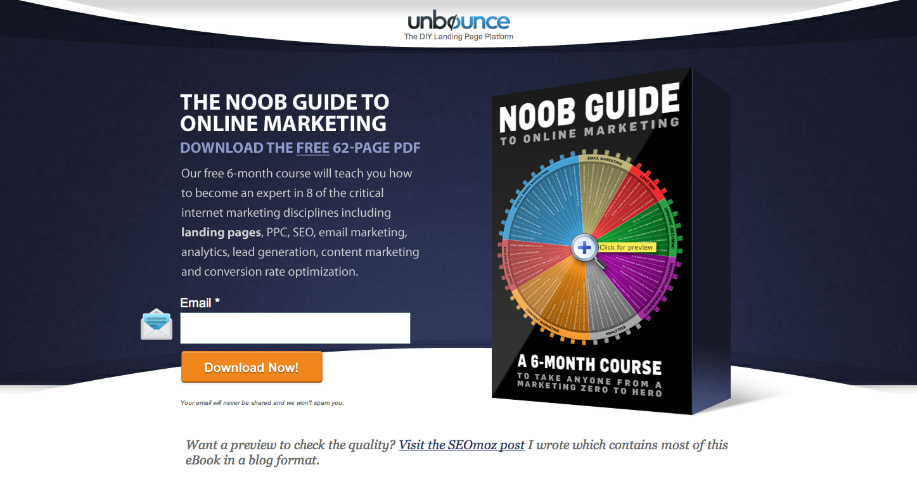

Unbounce

This digital marketing company wanted to test a landing page for their Noob Guide to Online Marketing. They wanted to check whether customers would rather give an email address or just tweet about their product.

This was the control version:

Source: https://unbounce.com/a-b-testing/pay-with-a-tweet-or-email-case-study/

And this was the variant version:

Source: https://unbounce.com/a-b-testing/pay-with-a-tweet-or-email-case-study/

The result? The email version outperformed the tweet version, gaining a 24% conversion lift by the end.
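A "conversion lift" like the one quoted above is the relative difference between two conversion rates. The short sketch below shows the arithmetic. The rates in the example are hypothetical numbers chosen to produce a 24% lift, not figures from the Unbounce case study.

```python
def relative_lift(baseline_rate, winner_rate):
    """Relative improvement of the winning version over the baseline, in percent."""
    return (winner_rate - baseline_rate) / baseline_rate * 100


# Hypothetical rates: 5.0% for the weaker version, 6.2% for the stronger one.
lift = relative_lift(0.050, 0.062)
print(f"{lift:.0f}% lift")
```

Note that a 24% lift here means a change of 1.2 percentage points, so it is worth checking both the relative and the absolute difference when reading test results.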

Conclusion: A/B testing works!

We hope that this article, as well as the mentioned examples, has shown you that A/B testing is a viable strategy that can improve the results you get online in a relatively short time, and with little effort! Do you want to run A/B tests on your digital product to enhance it or verify whether it’s as effective as possible? Write to us today!
